Here $\boldsymbol{\theta}$ and $\mathbf{x}$ are column vectors, as you will see in a moment. $\theta_j$ is the $j$th model parameter, which covers both the bias term $\theta_0$ and the feature weights $\theta_1, \theta_2, \dots, \theta_n$.

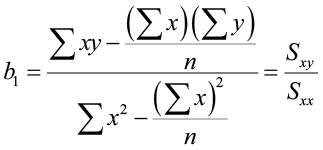

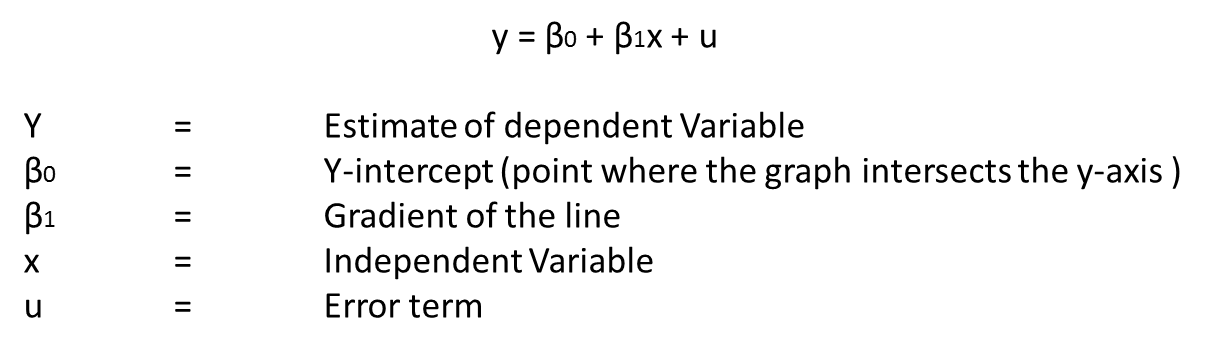
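A minimal NumPy sketch of the prediction for a single instance, treating $\boldsymbol{\theta}$ and $\mathbf{x}$ as column vectors. The parameter and feature values are made up for illustration. The sketch also assumes the common convention of prepending $x_0 = 1$ to the feature vector, so that the bias term $\theta_0$ is included in the product $\boldsymbol{\theta}^\mathsf{T}\mathbf{x} = \theta_0 + \theta_1 x_1 + \dots + \theta_n x_n$.

```python
import numpy as np

# Hypothetical parameters: bias theta_0 = 4.0, feature weights theta_1 = 3.0, theta_2 = -2.0
theta = np.array([[4.0], [3.0], [-2.0]])  # column vector, shape (n + 1, 1)

# Hypothetical instance with two features (x1 = 1.5, x2 = 0.5)
features = np.array([1.5, 0.5])

# Assumed convention: prepend x0 = 1 so the bias theta_0 is picked up by the product
x = np.concatenate(([1.0], features)).reshape(-1, 1)  # column vector, shape (n + 1, 1)

# theta^T x is a 1x1 matrix; .item() extracts the scalar prediction
y_hat = (theta.T @ x).item()

# The same prediction written out term by term: theta_0 + theta_1*x1 + theta_2*x2
y_hat_expanded = theta[0, 0] + theta[1, 0] * features[0] + theta[2, 0] * features[1]

print(y_hat, y_hat_expanded)  # 7.5 7.5
```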

How does the linear regression algorithm predict? For now, let's assume we have already obtained the proper linear relationship between the input features and the labels (how it is found is explained later), and focus on how a linear regression model uses that relationship to predict the value of an instance. $\boldsymbol{\theta}$ is the model's parameter vector, containing the bias term $\theta_0$ and the feature weights $\theta_1$ to $\theta_n$. You can think of the feature weights as the coefficients of the features in the linear equation. $\mathbf{x}$ is the instance's feature vector: it contains all the feature values of a particular data point, but not its target value.
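Prediction for many instances works the same way. In this hypothetical sketch (made-up $\boldsymbol{\theta}$ and feature values, again assuming an $x_0 = 1$ column for the bias), each row of the matrix is one instance's feature vector without its target value, and a single matrix product computes $\boldsymbol{\theta}^\mathsf{T}\mathbf{x}$ for every row at once:

```python
import numpy as np

# Hypothetical parameter vector: theta_0 (bias) = 1.0, theta_1 = 2.0, theta_2 = 0.5
theta = np.array([1.0, 2.0, 0.5])

# Hypothetical feature matrix: one row per instance, one column per feature (no targets)
X = np.array([
    [0.0, 0.0],
    [1.0, 2.0],
    [3.0, 4.0],
])

# Assumed convention: add an x0 = 1 column so every row becomes [1, x1, x2]
X_b = np.hstack([np.ones((X.shape[0], 1)), X])

# Each entry is theta^T x for one row; stacking the rows gives X_b @ theta
y_hat = X_b @ theta
print(y_hat)  # [1. 4. 9.]
```

Because each feature weight is the coefficient of its feature, increasing $x_1$ by 1 while holding $x_2$ fixed raises the prediction by exactly $\theta_1 = 2.0$.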

OK! It's time to dig deeper into linear regression. We will study it in detail later in this article; for now, when you think of linear regression, think of fitting a line such that the distances between the data points and the line are as small as possible (in the standard least-squares formulation, the sum of the squared vertical distances is minimized).

(Left) Image by Sathwick (Right) Image by Sathwick

As shown above, the red line fits the data better than the blue lines. In 2D, the linear relationship between the input feature and the output is simply a line. In other words, linear regression tries to find the best linear relationship between the input and the output: its goal is to find the parameter values (the feature weights and the bias term $b$, also written $\theta_0$) that best represent the given data.
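How the parameters are actually found is covered later in the article; as a hedged preview, here is a minimal NumPy sketch that assumes the usual least-squares reading of "distance" (the mean of the squared vertical distances, i.e. the mean squared error) and uses made-up data. It fits the line with `np.linalg.lstsq` and checks that the fitted line has a lower error than two arbitrary alternative lines, mirroring the red-versus-blue comparison in the figure.

```python
import numpy as np

# Made-up 1-D data that roughly follows y = 2x + 1 (illustrative only)
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.1, 2.9, 5.2, 6.8, 9.1])

# Design matrix with a column of ones for the bias theta_0
X_b = np.column_stack([np.ones_like(x), x])

# Least squares: choose theta to minimise the sum of squared vertical distances
theta, *_ = np.linalg.lstsq(X_b, y, rcond=None)

def mse(theta_candidate):
    """Mean squared vertical distance between the data points and the line."""
    residuals = X_b @ theta_candidate - y
    return np.mean(residuals ** 2)

# Two arbitrary alternative lines [theta_0, theta_1] (the "blue lines")
other_lines = [np.array([0.0, 2.5]), np.array([2.0, 1.5])]

print("fitted theta:", theta, "MSE:", mse(theta))
for candidate in other_lines:
    print("other theta:", candidate, "MSE:", mse(candidate))
```

With this data the fitted intercept and slope come out at about 1.04 and 1.99, close to the line the data was built around; by construction, no other line can have a lower mean squared error.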
